Docker has revolutionized the way applications are developed and deployed by making it easier to build, package, and distribute applications as self-contained units that can run consistently across different environments.

I’ve been using Docker as a primary tool for my machine learning experiments for a while. If you're interested in reading how sweet the Docker + machine learning combo is, you can hop over to my previous blog post.

Docker is important in machine learning for several reasons:

- Reproducibility: In machine learning, reproducibility is important to ensure that the results obtained in one environment can be replicated in another environment. Docker makes it easy to create a consistent, reproducible environment by packaging all the necessary software and dependencies in a container. This ensures that the code and dependencies used in development, testing, and deployment are the same, which reduces the chances of errors and improves the reliability of the model.

- Portability: Docker containers are lightweight and can be easily moved between different environments, such as between a developer’s laptop and a production server. This makes it easier to deploy machine learning models in production, without worrying about differences in the underlying infrastructure.

- Scalability: Because every container started from the same image is identical, you can run more or fewer copies of a service (typically with an orchestrator such as Kubernetes or Docker Swarm) as the demand for machine learning services changes. This lets machine learning applications handle growing data volumes and processing load without hand-configuring every new machine.

- Collaboration: Docker makes it easy to share machine learning models and code with other researchers and developers. Docker images can be easily shared and used by others, which promotes collaboration and reduces the amount of time needed to set up a new development environment.

Overall, Docker simplifies the process of building, deploying, and managing machine learning applications, making it an important tool for data scientists and machine learning engineers.

Alright… Docker is important. How can we get started?

I’ll share the procedure I normally follow with my ML experiments, then demonstrate how to containerize a simple ML experiment using Docker. You can use that as a template for your own ML workloads with a few tweaks.

I use Python as my main programming language, and for deep learning experiments I use the PyTorch framework. That means a lot of work with CUDA, and a lot of effort spent configuring the correct environment for model development and training.

Since most of us use Anaconda as our development environment, you may have experience managing different virtual environments for different experiments with different package versions. Yes, that’s one option, but it is not that easy when we are dealing with GPUs and different hardware configurations.

To make my life easier with ML experiments, I always use Docker and containerize my experiments. It’s clean and leaves no unwanted platform conflicts on my development rig, or even on my training cluster.

Yes! I use Ubuntu as my OS. I’m pretty sure you can do this on a Mac and on a Windows PC too (with a bit of a workaround, I guess).

All you need to get started is the Docker runtime installed on your workstation (plus the NVIDIA Container Toolkit if you want your containers to use the GPU). Then start containerizing!

Here are the steps I follow:

- I always try to use the latest (but stable) package versions in my experiments.

- Once I know all the packages I’m going to use within the experiment, I start listing them in the `environment.yml` file. (I use mamba as the package manager, which is similar to conda but a bit faster.)

- Keep all the package listings in the `environment.yml` file (this makes it a lot easier to manage).

- Keep my data sources local (including them in the Docker image itself makes it bulky and hard to manage).

- Configure my experiments to write their logs/results to a local directory (in the shared template, that’s the `results` directory).

- Mount the `data` and `results` directories into the Docker container (this lets me access the results and data even after the container is stopped).

- Use a bash script to build and run the Docker container with the required arguments (I like to keep it as a `.sh` file in the experiment directory itself).
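Here is a minimal sketch of that build-and-run step. It is written in Python rather than bash to keep all the examples in one language, and it assumes it is run from the project directory that holds the Dockerfile plus the `data` and `results` folders. The image tag `mnist-docker-example` and the entry point `train.py` are hypothetical placeholders, not names taken from the repo. By default it only prints the `docker build` and `docker run` commands; pass `--run` to execute them.

```python
from __future__ import annotations

import subprocess
import sys
from pathlib import Path

# Hypothetical names: replace with your own image tag and training script.
IMAGE_TAG = "mnist-docker-example"
ENTRYPOINT = ["python3", "train.py"]


def build_command(project_dir: Path, tag: str = IMAGE_TAG) -> list[str]:
    """`docker build` using project_dir (which holds the Dockerfile) as the context."""
    return ["docker", "build", "-t", tag, str(project_dir)]


def run_command(project_dir: Path, tag: str = IMAGE_TAG, gpus: str = "all") -> list[str]:
    """`docker run` that bind-mounts the host's data/ and results/ folders."""
    data_dir = (project_dir / "data").resolve()
    results_dir = (project_dir / "results").resolve()
    return [
        "docker", "run", "--rm",              # remove the container when it exits
        "--gpus", gpus,                       # requires the NVIDIA Container Toolkit
        "-v", f"{data_dir}:/app/data",        # host data -> /app/data in the container
        "-v", f"{results_dir}:/app/results",  # results written here stay on the host
        tag,
        *ENTRYPOINT,
    ]


def main(project_dir: Path, execute: bool = False) -> None:
    """Print both commands; only run them when execute is True."""
    if execute:
        (project_dir / "results").mkdir(exist_ok=True)
    for cmd in (build_command(project_dir), run_command(project_dir)):
        print(" ".join(cmd))
        if execute:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run by default; pass --run to actually build the image and start the container.
    main(Path.cwd(), execute="--run" in sys.argv[1:])
```

The two `-v` flags are what let the data and results outlive the container: the container only sees bind mounts of the host folders. One thing worth checking is that the Dockerfile's non-root `user` can write to the host's `results` folder, which usually works when the host user has the same UID (commonly 1000).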

In the example I’ve shared here, a simple MNIST classification experiment has been containerized and run in a GPU-based environment.

GitHub repo: https://github.com/haritha91/mnist-docker-example

I used an Ubuntu 20.04 base image from NVIDIA with CUDA 11.1 (`nvidia/cuda:11.1.1-base-ubuntu20.04`). The package manager used here is mamba, with Python 3.8.

```dockerfile
FROM nvidia/cuda:11.1.1-base-ubuntu20.04

# Remove any third-party apt sources to avoid issues with expiring keys.
RUN rm -f /etc/apt/sources.list.d/*.list

# Setup timezone (for tzdata dependency install)
ENV TZ=Australia/Melbourne
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime

# Install some basic utilities and dependencies.
RUN apt-get update && apt-get install -y \
    curl \
    ca-certificates \
    sudo \
    git \
    bzip2 \
    libx11-6 \
    libgl1 libsm6 libxext6 libglib2.0-0 \
    && rm -rf /var/lib/apt/lists/*

# Create a working directory.
RUN mkdir /app
WORKDIR /app

# Create a non-root user and switch to it.
RUN adduser --disabled-password --gecos '' --shell /bin/bash user \
    && chown -R user:user /app
RUN echo "user ALL=(ALL) NOPASSWD:ALL" > /etc/sudoers.d/90-user
USER user

# All users can use /home/user as their home directory.
ENV HOME=/home/user
RUN chmod 777 /home/user

# Create data directory (mount point for the host's data folder)
RUN sudo mkdir /app/data && sudo chown user:user /app/data

# Create results directory (mount point for the host's results folder)
RUN sudo mkdir /app/results && sudo chown user:user /app/results

# Install Mambaforge and Python 3.8.
ENV CONDA_AUTO_UPDATE_CONDA=false
ENV PATH=/home/user/mambaforge/bin:$PATH
RUN curl -sLo ~/mambaforge.sh https://github.com/conda-forge/miniforge/releases/download/4.9.2-7/Mambaforge-4.9.2-7-Linux-x86_64.sh \
    && chmod +x ~/mambaforge.sh \
    && ~/mambaforge.sh -b -p ~/mambaforge \
    && rm ~/mambaforge.sh \
    && mamba clean -ya

# Install project requirements.
COPY --chown=user:user environment.yml /app/environment.yml
RUN mamba env update -n base -f environment.yml \
    && mamba clean -ya

# Copy source code into the image.
# List data/ and results/ in a .dockerignore file so they are not baked into the image.
COPY --chown=user:user . /app

# Set the default command to python3.
CMD ["python3"]
```
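Inside the container, the Dockerfile above creates `/app/data` and `/app/results` as mount points for the host folders. A minimal sketch of the experiment side, assuming the training script simply reads from one and writes to the other, might look like this. The `DATA_DIR`/`RESULTS_DIR` environment overrides, the `save_results` helper and the `metrics-<timestamp>.json` naming are all hypothetical, added so the same script also runs outside Docker.

```python
import json
import os
from datetime import datetime, timezone
from pathlib import Path

# Defaults match the mount points created in the Dockerfile; the DATA_DIR and
# RESULTS_DIR environment overrides are a hypothetical convenience.
DATA_DIR = Path(os.environ.get("DATA_DIR", "/app/data"))  # point your dataset loader here
RESULTS_DIR = Path(os.environ.get("RESULTS_DIR", "/app/results"))


def save_results(metrics: dict, results_dir: Path = RESULTS_DIR) -> Path:
    """Write metrics to a timestamped JSON file in the (bind-mounted) results folder."""
    results_dir.mkdir(parents=True, exist_ok=True)
    # Microsecond resolution keeps repeated calls from overwriting each other.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S-%f")
    out_path = results_dir / f"metrics-{stamp}.json"
    out_path.write_text(json.dumps(metrics, indent=2))
    return out_path
```

Calling something like `save_results({"epoch": epoch, "loss": loss})` at the end of each epoch leaves a JSON file in the host's `results` folder, where it stays after the container exits.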

At the beginning you may see this as an extra burden. Trust me: when your experiments get complex and you start working on different ML projects in parallel, Docker is a lifesaver, and it’s going to save you a lot of the time you would otherwise waste on low-level configuration.

Happy coding!

Data is king in machine learning. In the process of building machine learning models, data is used as the input features.


A common application of one-hot encoding (OHE) is in Natural Language Processing (NLP), where it can easily turn words into vectors. Here comes a drawback of OHE: the vector size can get very large, since it grows with the number of distinct values in the feature column. If there are only two distinct categories in the feature, there’s no need to construct two additional columns; you can just replace the feature column with one Boolean column.
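The trade-off above is easy to see in code. Below is a minimal pure-Python sketch (no pandas or scikit-learn, so the mechanics stay visible); the helper names `one_hot` and `binary_to_bool` are hypothetical. Each distinct value gets its own 0/1 column, so the vector length equals the number of distinct categories, while a two-category feature collapses into a single Boolean column.

```python
from typing import Dict, List, Sequence


def one_hot(values: Sequence[str]) -> List[Dict[str, int]]:
    """One 0/1 column per distinct category; exactly one column is 1 in each row."""
    categories = sorted(set(values))  # stable column order
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]


def binary_to_bool(values: Sequence[str], positive: str) -> List[bool]:
    """A two-category feature needs only one Boolean column, not two one-hot columns."""
    if len(set(values)) > 2:
        raise ValueError("expected at most two distinct categories")
    return [v == positive for v in values]


colors = ["red", "green", "blue", "green"]
encoded = one_hot(colors)
print(len(encoded[0]))  # → 3 (one column per distinct color)
print(encoded[0])       # → {'is_blue': 0, 'is_green': 0, 'is_red': 1}
print(binary_to_bool(["yes", "no", "yes"], positive="yes"))  # → [True, False, True]
```

For a vocabulary of tens of thousands of words, the same `one_hot` logic yields vectors with tens of thousands of entries, which is exactly the size problem described above.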

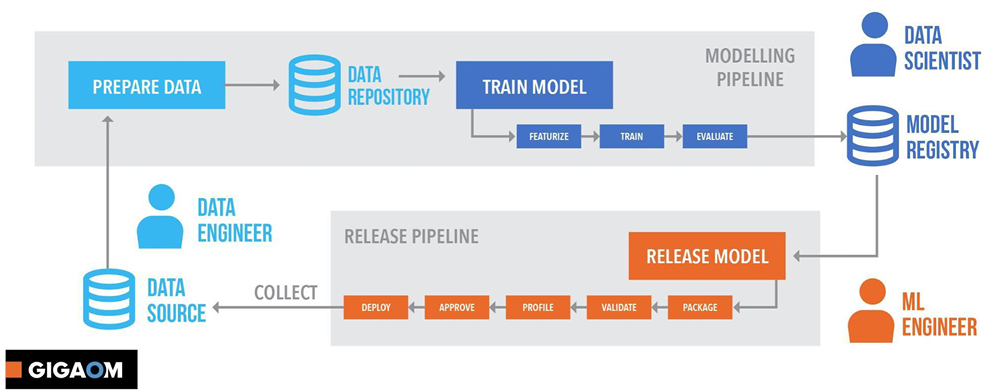

When it comes to a machine learning or data science problem, the most difficult part is often finding the best approach to the task: simply getting an idea of where to start!
