Data is the key component of machine learning, so a high-quality training dataset is the main success factor of any training process. A good dataset leads to more accurate models and faster convergence, and it is also the main deciding factor in the model’s fairness and lack of bias.

Let’s discuss the dos and don’ts of selecting and preparing a dataset for training a machine learning model, and the factors we should consider when composing the training data. These apply to structured numerical data as well as to unstructured data types such as images and videos.

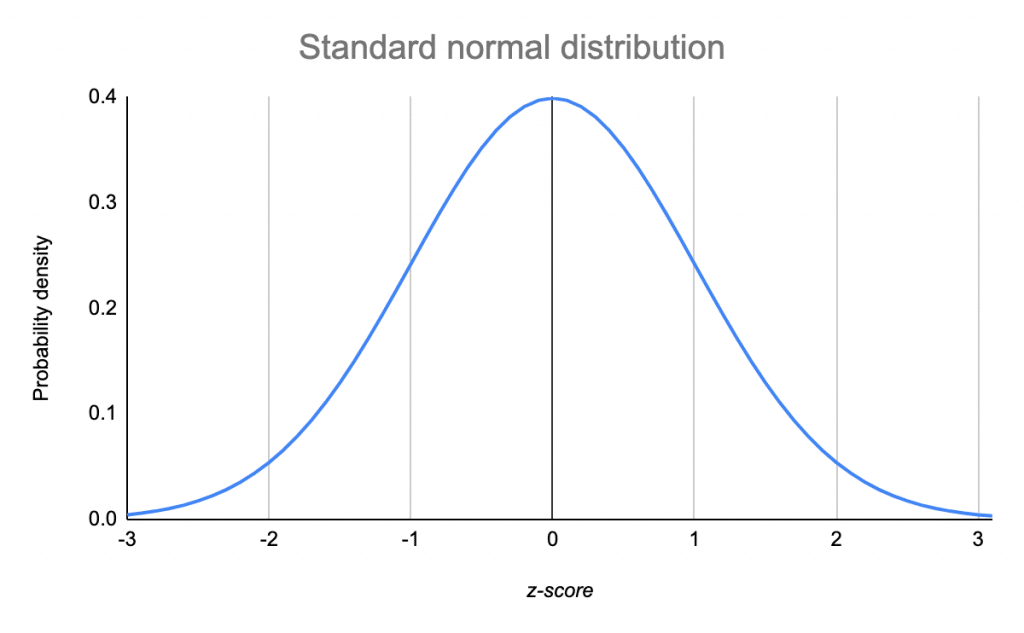

What does the dataset’s distribution look like?

This matters mostly for numerical datasets. Calculating and plotting the frequency distribution (how often each value occurs in the dataset) gives us insight into how the problem is formulated and how the classes are distributed. ML engineers tend to prefer normally distributed datasets, on the assumption that they provide enough data points across the range of values to train the model.

Though the normal distribution is common in nature and psychology, there’s no need for every dataset you use for model training to be normally distributed. (Obviously, some real-world data collections don’t fit the bell curve.)
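
As a rough illustration of the frequency distribution mentioned above, here is a minimal sketch that bins a numeric column and prints a text histogram. The `ages` values and the `frequency_distribution` helper are made up for this example; in practice you would run this on your own feature column, probably with a plotting library.

```python
from collections import Counter

def frequency_distribution(values, bin_width):
    """Count how many values fall into each bin of width bin_width."""
    bins = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(bins.items()))

# Hypothetical feature column (illustrative values only).
ages = [23, 25, 31, 35, 36, 38, 41, 42, 44, 47, 52, 58, 63, 71]
width = 10
histogram = frequency_distribution(ages, bin_width=width)

# One row per bin; the bar length is the number of data points in that bin.
for start, count in histogram.items():
    print(f"{start:>3}-{start + width - 1}: {'#' * count}")
```

A quick look at the bars shows which value ranges are well covered and which have only a handful of data points.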

Does the dataset represent the real world?

We train machine learning models to solve real-world problems, so the data should be real too. It’s OK to use synthetic data if you have no other way to collect more data or need to balance the classes, but always make sure to include real-world data, since it makes the model more robust in testing and production. Please don’t put some random numbers into a machine learning model and expect it to solve your business problem with 90% accuracy 😉

Does the dataset match the context?

We must always make sure the characteristics of the dataset used for training match the conditions the model will face when it goes live in production. For example, let’s say we need to train a computer vision model for a mobile application that identifies certain types of tree leaves from images captured with the phone’s camera. There’s no use in training the model only with images captured in a lab environment. Your training set should include images captured in the wild, which closely resemble the real-world use case of the application.

Is the data redundant?

Data redundancy, or data duplication, is an important point to pay attention to when training ML models. If the dataset contains the same data points repeatedly, the model overfits to those data points and will not generalize well to the unseen data it meets in testing.
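
A minimal sketch of one way to check for exact duplicates before training. It assumes each row is a small tuple of hashable values; the `rows` data and the `drop_duplicates` helper are hypothetical. Near-duplicates (for example, the same image resized) need a different approach and are not covered here.

```python
def drop_duplicates(rows):
    """Return rows with exact repeats removed, keeping first occurrences in order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique

# Hypothetical labeled rows: two measurements and a class label.
rows = [
    (5.1, 3.5, "setosa"),
    (4.9, 3.0, "setosa"),
    (5.1, 3.5, "setosa"),  # exact repeat of the first row
    (6.2, 2.9, "versicolor"),
]
unique_rows = drop_duplicates(rows)
print(f"removed {len(rows) - len(unique_rows)} duplicate row(s)")
```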

Is the dataset biased?

A biased dataset will not produce an unbiased trained model. The dataset we choose should always be balanced and not biased toward certain cases.

Let’s take the example of a supervised computer vision model that identifies people’s gender from their faces. Let’s assume the model is trained only with images of people from the USA and we are going to use it in an application that is used worldwide. The model will produce unreliable predictions, since the training data is biased toward certain ethnicities. To get a better outcome, the training set should have images of people from different ethnicities as well as from different age groups.
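
One simple way to spot this kind of imbalance is to measure each group’s share of the training set. The sketch below is illustrative only: the `regions` metadata, the helper names, and the 10% threshold are assumptions, and the right threshold depends on your use case.

```python
from collections import Counter

def group_shares(labels):
    """Return the fraction of the dataset that belongs to each group."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def underrepresented(labels, min_share):
    """List the groups whose share of the dataset is below min_share."""
    return sorted(g for g, share in group_shares(labels).items() if share < min_share)

# Hypothetical per-image metadata: the region each training image came from.
regions = ["north_america"] * 80 + ["europe"] * 12 + ["asia"] * 6 + ["africa"] * 2

shares = group_shares(regions)
flagged = underrepresented(regions, min_share=0.10)  # 10% is an arbitrary cutoff
print("shares:", shares)
print("underrepresented groups:", flagged)
```

The same check works for class labels, age brackets, or any other attribute you expect the model to handle in production.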

Which data is there too little/too much of?

“How much data do we need to train a model with good accuracy?” This is a question that comes up quite often when we are planning ML projects. The simple answer is: “we don’t know!” 😀

There are no exact numbers for how much data is needed to train an ML model. We know that deep learning models are data hungry: we need large datasets to train deep neural networks, since we use them to represent complex non-linear relationships. Even with traditional machine learning algorithms, we should make sure to have enough data from all the classes, including the edge and corner cases.

What happens if we have too much data? Past a certain point, it doesn’t help much. It makes the training process longer and costlier without a matching gain in accuracy. If the extra data is redundant or skewed toward certain cases, it may even end up producing an overfitted model.
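
A common way to judge whether more data is still helping is a learning curve: train on growing subsets and watch the validation accuracy. The sketch below is a toy illustration only. It assumes a one-feature, two-class problem with randomly generated points and a hand-written nearest-centroid “model”; all names are hypothetical, and with a real project you would use your own data and model.

```python
import random
import statistics

def make_point(rng):
    """Draw one labeled sample: class 0 is centered at 0.0, class 1 at 2.0."""
    label = rng.randint(0, 1)
    return rng.gauss(2.0 * label, 1.0), label

def fit_centroids(samples):
    """Nearest-centroid 'model': the mean feature value of each class."""
    return {
        label: statistics.mean(x for x, y in samples if y == label)
        for label in {y for _, y in samples}
    }

def accuracy(centroids, samples):
    """Fraction of samples whose nearest centroid matches their true label."""
    correct = sum(
        min(centroids, key=lambda c: abs(x - centroids[c])) == y
        for x, y in samples
    )
    return correct / len(samples)

def learning_curve(train, validation, sizes):
    """Validation accuracy after training on the first n samples, for each n."""
    return [(n, accuracy(fit_centroids(train[:n]), validation)) for n in sizes]

rng = random.Random(0)  # fixed seed so the toy run is repeatable
train = [make_point(rng) for _ in range(2000)]
validation = [make_point(rng) for _ in range(500)]

curve = learning_curve(train, validation, sizes=[20, 100, 500, 2000])
for n, acc in curve:
    print(f"{n:>5} training samples -> validation accuracy {acc:.3f}")
```

When the accuracy stops improving as the training size grows, collecting more of the same kind of data is unlikely to pay off.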

These are only a few of the points to consider when selecting a dataset for training a machine learning model. Please add your thoughts on dataset selection in the comments.