The release of ChatGPT in November 2022 gave a mega boost to the world of AI. Since then, everyone has been playing around with Large Language Models (LLMs) and exploring their possibilities.

Along with these interesting possibilities, new approaches and methodologies for interacting with LLMs came into play. Prompt engineering is one of the major areas that even the general public is interested in right now.

To get familiar with the topic and not feel left behind, I followed several online resources, including the Prompt Engineering for Developers course from Deeplearning.ai. Here are some interesting points that caught my attention. This brief should be helpful for anyone who’s using ChatGPT or any other LLM, not only in development scenarios but also in the general chaos of daily life.

What actually is Prompt Engineering?

Without any hesitation, I asked ChatGPT this question 😀

Prompt engineering refers to the process of designing and refining prompts to elicit desired responses from a language model like ChatGPT. It involves crafting the initial instruction or query in a way that effectively communicates the desired task or information to the model. Prompt engineering can significantly influence the quality and relevance of the model’s responses.

ChatGPT

In simple terms,

Prompt engineering is the methodology of giving clear instructions to LLMs. It helps the language model understand the instructions clearly and produce more precise output.

LLMs in the GPT family (GPT-3, GPT-3.5, GPT-4, etc.) are trained to predict the next word (strictly speaking, the next token) given the text that comes before it. Given that, the instructions we provide to the model should be specific and understandable. There are no hard and fast rules for writing prompts, but the more precise they are, the better.
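To make the idea of next-word prediction a little more concrete, here is a toy bigram model in Python. It only counts which word follows which in a tiny corpus and predicts the most frequent follower. This is a deliberately simplified stand-in, not how GPT models work internally (they use neural networks over tokens and a much longer context), and the function names are hypothetical; it just shows that the prediction depends entirely on the text the model is given.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram_model(corpus: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word directly follows it."""
    words = corpus.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    # Pair each word with the word that comes right after it.
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if nothing ever followed it."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


model = train_bigram_model("the hotel is near the beach and the hotel has a pool")
print(predict_next_word(model, "the"))  # → hotel
```

Here "the" is followed by "hotel" twice and by "beach" once, so "hotel" wins. A real LLM conditions on far more context than one preceding word, which is exactly why a clear, specific prompt steers its predictions so strongly.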

There are two key principles we should keep in mind when prompting.

- Write clear and specific instructions.

- Give the model time to “think”.

Be clear! Be Precise!

There are many tactics we can follow to make our instructions clear and easy for an LLM to understand.

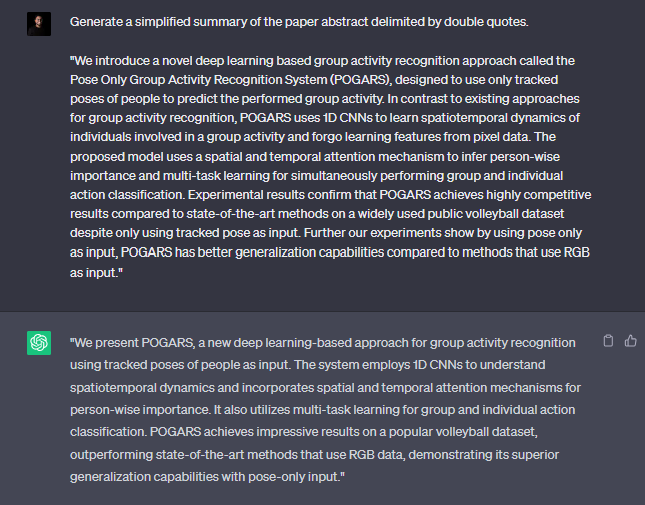

Use delimiters to indicate distinct parts of the input

Take the example of using a GPT model to summarize a particular text. It’s always better to clearly indicate which part of the prompt is the instruction and which part is the actual text to be summarized. You can use any delimiter you feel comfortable with. Here I’m using double quotes to mark the text to be summarized.
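When prompts are built in code, the delimiter can be added programmatically. Below is a minimal Python sketch that only assembles the prompt string; the function name `build_summary_prompt` is hypothetical, and sending the prompt to a model is left out because that depends on the client library you use.

```python
def build_summary_prompt(text: str, delimiter: str = '"') -> str:
    """Wrap `text` in `delimiter` so the model can tell the instruction
    apart from the content it should summarize."""
    instruction = f"Summarize the text enclosed in {delimiter} below in one sentence."
    # A blank line separates the instruction from the delimited text.
    return f"{instruction}\n\n{delimiter}{text}{delimiter}"


print(build_summary_prompt("The hotel is right by the beach and the staff was friendly."))
```

If the text itself may contain double quotes, a rarer delimiter such as triple backticks (`delimiter="```"`) keeps the boundary unambiguous.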

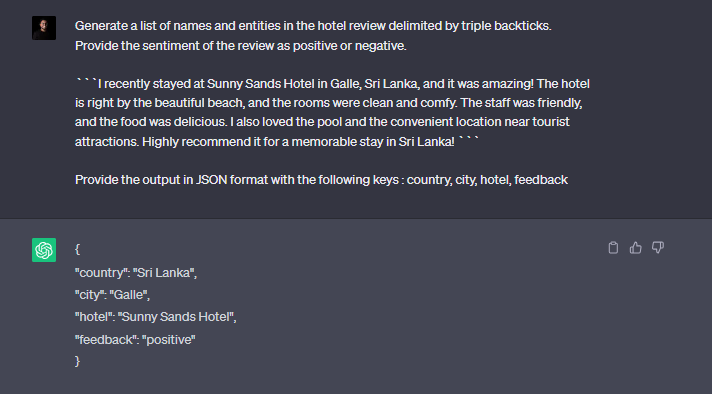

Ask for structured outputs

When it comes to LLM-aided application development, we use OpenAI APIs to perform various natural language processing tasks. For example, we can use GPT models to extract key entities and the sentiment from a set of product reviews. When the model’s output is consumed by a software system, it is much easier to work with if it comes in a structured format such as a JSON object or HTML.
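On the consuming side, a program typically parses the reply and checks that it has the expected shape before using it. Here is a minimal Python sketch, assuming the model was asked to reply with a single JSON object; the function `parse_model_json` and the key names `sentiment` and `entities` are hypothetical examples, not part of any OpenAI API.

```python
import json


def parse_model_json(reply: str, required_keys: set[str]) -> dict:
    """Parse a model reply that should be a JSON object with the given keys.

    Raises ValueError if the reply is not valid JSON, is not a JSON object,
    or lacks any of the required keys.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as err:
        raise ValueError(f"Reply is not valid JSON: {err}") from err
    if not isinstance(data, dict):
        raise ValueError("Reply is valid JSON but not an object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"Reply is missing keys: {sorted(missing)}")
    return data


# Hypothetical reply written by hand for illustration (not real model output).
reply = '{"sentiment": "positive", "entities": ["pool", "staff"]}'
result = parse_model_json(reply, {"sentiment", "entities"})
print(result["sentiment"])  # → positive
```

Failing loudly is a deliberate choice here: models do not always follow formatting instructions perfectly, so the `ValueError` gives the application a clear point to retry or fall back instead of passing malformed data further down the system.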

Here’s a prompt that takes feedback about a hotel as input and produces structured JSON output. This comes in handy in many analytics scenarios and when integrating OpenAI APIs into production environments.

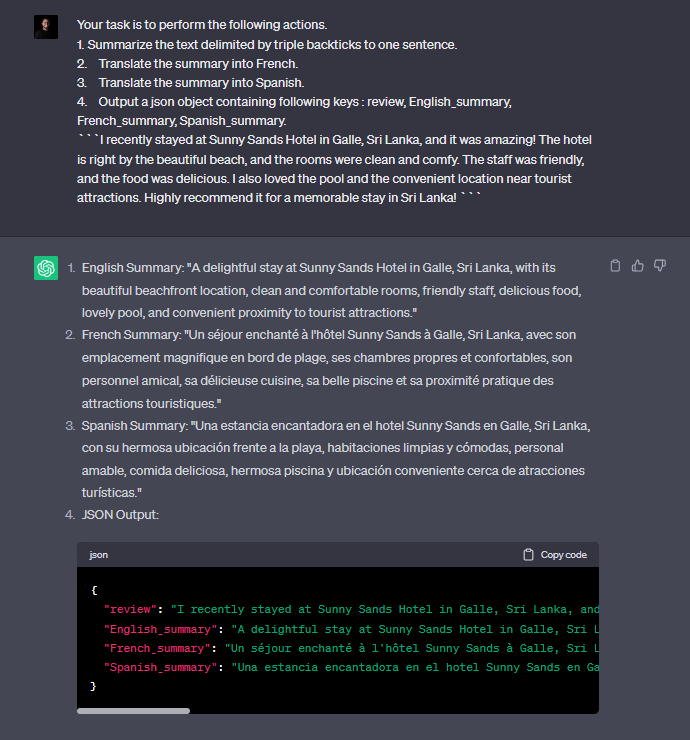
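Since the exact prompt lives in the screenshot, here is a hedged Python sketch of how a similar prompt could be assembled in code. The output keys (`sentiment`, `liked`, `disliked`) and the function name `build_hotel_feedback_prompt` are hypothetical choices for illustration, not the ones used in the image.

```python
# Hypothetical output schema: key names and descriptions are illustrative only.
OUTPUT_SCHEMA = {
    "sentiment": "positive, negative, or mixed",
    "liked": "list of things the guest liked",
    "disliked": "list of things the guest disliked",
}


def build_hotel_feedback_prompt(feedback: str) -> str:
    """Build a prompt that asks for the feedback analysis as a JSON object."""
    # One line per expected key, so the model sees the exact schema.
    schema_lines = "\n".join(
        f'- "{key}": {description}' for key, description in OUTPUT_SCHEMA.items()
    )
    return (
        "Analyze the hotel feedback delimited by triple quotes.\n"
        "Respond only with a JSON object containing these keys:\n"
        f"{schema_lines}\n\n"
        f'"""{feedback}"""'
    )


print(build_hotel_feedback_prompt("Great pool, but check-in was slow."))
```

Listing the keys explicitly in the prompt gives the consuming code a fixed schema to check against after calling `json.loads` on the reply.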

It’s always good to go step by step

Even for humans, a task is easier to complete when the instructions are given in steps. The same works well with LLMs. Here’s an example of performing a summarization and two translations of a customer review through a single prompt. Observe the structure of the prompt and how it guides the LLM to the desired output. Make sure you construct your prompt as procedural instructions.
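The same idea can be expressed in code by numbering the instructions. This Python sketch is a hypothetical reconstruction, not the exact prompt used for this post: the step wording and the function name `build_step_by_step_prompt` are assumptions, while the JSON keys are taken from the output shown in this post.

```python
# Hypothetical step wording; only the JSON key names come from this post.
STEPS = (
    "Summarize the review delimited by triple quotes in one sentence.",
    "Translate the summary into French.",
    "Translate the summary into Spanish.",
    "Output a JSON object with the keys: review, English_summary, "
    "French_summary, Spanish_summary.",
)


def build_step_by_step_prompt(review: str, steps: tuple[str, ...] = STEPS) -> str:
    """Number each instruction so the model works through them in order."""
    numbered = "\n".join(
        f"Step {i}: {step}" for i, step in enumerate(steps, start=1)
    )
    return (
        "Perform the following actions in order:\n"
        f"{numbered}\n\n"
        f'Review: """{review}"""'
    )


print(build_step_by_step_prompt("Lovely beach hotel with friendly staff."))
```

Keeping the steps in a tuple makes it easy to add, remove, or reorder actions without rewriting the whole prompt.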

Here’s the output in JSON.

```json
{
  "review": "I recently stayed at Sunny Sands Hotel in Galle, Sri Lanka, and it was amazing! The hotel is right by the beautiful beach, and the rooms were clean and comfy. The staff was friendly, and the food was delicious. I also loved the pool and the convenient location near tourist attractions. Highly recommend it for a memorable stay in Sri Lanka!",
  "English_summary": "A delightful stay at Sunny Sands Hotel in Galle, Sri Lanka, with its beautiful beachfront location, clean and comfortable rooms, friendly staff, delicious food, lovely pool, and convenient proximity to tourist attractions.",
  "French_summary": "Un séjour enchanté à l'hôtel Sunny Sands à Galle, Sri Lanka, avec son emplacement magnifique en bord de plage, ses chambres propres et confortables, son personnel amical, sa délicieuse cuisine, sa belle piscine et sa proximité pratique des attractions touristiques.",
  "Spanish_summary": "Una estancia encantadora en el hotel Sunny Sands en Galle, Sri Lanka, con su hermosa ubicación frente a la playa, habitaciones limpias y cómodas, personal amable, comida deliciosa, hermosa piscina y ubicación conveniente cerca de atracciones turísticas."
}
```
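Because the output is plain JSON, a program can use it directly. This Python sketch parses an abbreviated copy of the output above with the standard `json` module and relies on the `<Language>_summary` naming of the keys to group the summaries by language; the helper name `summaries_by_language` is hypothetical.

```python
import json

# Abbreviated copy of the model output above (long values shortened with "...").
MODEL_OUTPUT = """{
  "review": "I recently stayed at Sunny Sands Hotel in Galle, Sri Lanka, and it was amazing! ...",
  "English_summary": "A delightful stay at Sunny Sands Hotel in Galle, Sri Lanka, ...",
  "French_summary": "Un séjour enchanté à l'hôtel Sunny Sands à Galle, Sri Lanka, ...",
  "Spanish_summary": "Una estancia encantadora en el hotel Sunny Sands en Galle, Sri Lanka, ..."
}"""


def summaries_by_language(output: dict) -> dict[str, str]:
    """Map each "<Language>_summary" key to its value, keyed by language name."""
    suffix = "_summary"
    return {
        key[: -len(suffix)]: value
        for key, value in output.items()
        if key.endswith(suffix)
    }


data = json.loads(MODEL_OUTPUT)
for language, summary in summaries_by_language(data).items():
    print(f"{language}: {summary}")
```

Deriving the language from the key name means a fourth translation step in the prompt would show up here without any code change, as long as the model keeps the same key convention.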

Hallucination is one of the major limitations of LLMs. It occurs when the model produces coherent-sounding, verbose, but inaccurate information due to a lack of understanding of cause and effect. Following proper prompting methodologies and tactics can reduce hallucinations to some extent, but cannot eliminate them entirely.

It’s always the developer’s responsibility to build AI systems that follow responsible AI principles and to be accountable for the process.

Feel free to share your experiences with prompt engineering and how you are using LLMs in your development scenarios.

Happy coding!