With the huge hype around Retrieval-Augmented Generation (RAG) in GenAI applications, vector databases have come to play a vital role in them.

Azure AI Search is a leading cloud-based solution for indexing and querying a variety of data sources. It also serves as a vector database and is widely used in production-level applications.

Similar to any cloud resource, Azure AI Search has different pricing tiers and limitations. For instance, if you choose the Standard S1 pricing tier, you can create a maximum of 50 indexes.

Recently, a use case arose where I had to create more than 50 individual indexes in a single AI Search resource. The use case was a RAG-based application for an e-library, where each book had to be indexed with a logical separation from the others.

The easiest way forward would have been to create a separate index for each book inside the vector database. That was not feasible, because S1 allows at most 50 indexes, which is fewer than the number of books in the library. As we were cost-conscious, there was no room to move to Standard S2 or a higher pricing tier, especially since the actual storage required for all the indexes was less than 100 GB. So S1 remained the choice, and I needed another way to keep the embeddings of each book separate.

My approach was to add an extra metadata field to the index and make it filterable. The book's name is stored as the value of that field. That value can then be used to filter for a particular book when we query the index through the API.
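To make the idea concrete, here is a toy, in-memory sketch of filtering one shared index by a `book_name` metadata value before ranking chunks by cosine similarity. The `Chunk` class, the three-dimensional vectors and the book data are all made up for illustration. Treat it as a conceptual model only; it is not how Azure AI Search executes queries internally.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    """One indexed chunk: text, embedding and metadata (hypothetical toy data)."""
    content: str
    vector: list
    metadata: dict = field(default_factory=dict)


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(index, query_vector, book_name, k=2):
    """Keep only the chunks of one book, then rank the survivors by similarity."""
    candidates = [c for c in index if c.metadata.get("book_name") == book_name]
    candidates.sort(key=lambda c: cosine(c.vector, query_vector), reverse=True)
    return candidates[:k]


# A single shared "index" that holds chunks from two different books.
index = [
    Chunk("Oliver asks for more.", [0.9, 0.1, 0.0], {"book_name": "Oliver_Twist"}),
    Chunk("Fagin's den in London.", [0.7, 0.3, 0.1], {"book_name": "Oliver_Twist"}),
    Chunk("Pip meets Magwitch.", [0.95, 0.05, 0.0], {"book_name": "Great_Expectations"}),
]

results = filtered_search(index, [1.0, 0.0, 0.0], "Oliver_Twist")
print([c.content for c in results])
# ['Oliver asks for more.', "Fagin's den in London."]
```

The `Great_Expectations` chunk is actually the closest match to the query vector. It never appears in the results because the filter removes it before ranking.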

Embeddings were generated with the ada-002 embedding model (text-embedding-ada-002), and GPT-4o served as the foundation model within the application.

Let’s walk through how that was done with the aid of the LangChain framework.

01. Import required libraries

We use the LangChain Python library to orchestrate LLM-based operations within the application.

```python
import os

from langchain_community.vectorstores.azuresearch import AzureSearch
from langchain_openai import AzureOpenAIEmbeddings
from langchain_community.document_loaders import DirectoryLoader
from azure.search.documents.indexes.models import (
    SearchableField,
    SearchField,
    SearchFieldDataType,
    SimpleField,
)
```

02. Initiate the embedding model

This application uses the ada-002 model for embeddings, but you can use any embedding model you prefer.

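```python
# EMBEDDING_MODEL (the deployment name), AZURE_OPENAI_API_VERSION, AZURE_OPENAI_ENDPOINT
# and AZURE_OPENAI_API_KEY are assumed to be defined beforehand, e.g. read via os.environ.
embeddings: AzureOpenAIEmbeddings = AzureOpenAIEmbeddings(
    azure_deployment=EMBEDDING_MODEL,
    openai_api_version=AZURE_OPENAI_API_VERSION,
    azure_endpoint=AZURE_OPENAI_ENDPOINT,
    api_key=AZURE_OPENAI_API_KEY,
)

# The vector store is given embed_query, which embeds a single string.
embedding_function = embeddings.embed_query
```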

03. Create the structure of the index within the vector database

We define the structure of the index by configuring an additional metadata field, book_name. It must be filterable, since we will filter the content in the index by its value.

```python
index_fields = [
    SimpleField(
        name="id",
        type=SearchFieldDataType.String,
        key=True,
        filterable=True,
    ),
    SearchableField(
        name="content",
        type=SearchFieldDataType.String,
        searchable=True,
    ),
    SearchField(
        name="content_vector",
        type=SearchFieldDataType.Collection(SearchFieldDataType.Single),
        searchable=True,
        vector_search_dimensions=len(embedding_function("Text")),
        vector_search_profile_name="myHnswProfile",
    ),
    SearchableField(
        name="metadata",
        type=SearchFieldDataType.String,
        searchable=True,
    ),
    # Additional field to store the name of the book
    SearchableField(
        name="book_name",
        type=SearchFieldDataType.String,
        filterable=True,
    ),
]
```

04. Create the Azure AI Search vector database

Next, we create the Azure AI Search vector store, passing in the custom field configuration defined above.

```python
vector_store: AzureSearch = AzureSearch(
    azure_search_endpoint=AZURE_SEARCH_ENDPOINT,
    azure_search_key=AZURE_SEARCH_KEY,
    index_name="index_of_books",
    embedding_function=embedding_function,
    fields=index_fields,
)
```

05. Loading documents from a local directory

Any LangChain document loader can be used to load the content. In this example, pages contains all the pages of a single book.

```python
loader = DirectoryLoader("../", glob="**/*")
pages = loader.load()
```

06. Add the additional metadata field for each document object

The loader returns the book's content as LangChain documents. Each document needs a value for the custom filterable field defined in the index structure, and a simple loop can assign the book's name to that field. Change the value whenever the pages of a new book are loaded through the loader.

```python
for page in pages:
    # Tag every page with the book it belongs to; update this value for each new book.
    page.metadata["book_name"] = "Oliver_Twist"
```
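Instead of editing the hard-coded value for every book, you could derive the tag from the file path. The sketch below makes two assumptions. First, it assumes a hypothetical layout in which each book sits in its own folder (for example `books/Oliver_Twist/`). Second, it assumes the loader records each file's path under `metadata["source"]`, which LangChain's file loaders typically do. `tag_with_book_name` is a hypothetical helper, and `FakePage` is only a stand-in for LangChain's `Document` so that the example runs on its own.

```python
from dataclasses import dataclass, field
from pathlib import Path


def tag_with_book_name(pages, books_root):
    """Set metadata["book_name"] on each page from the folder it was loaded from."""
    root = Path(books_root).resolve()
    for page in pages:
        source = Path(page.metadata["source"]).resolve()
        # The first folder below books_root is treated as the book's name;
        # relative_to raises ValueError if a file lives outside books_root.
        page.metadata["book_name"] = source.relative_to(root).parts[0]
    return pages


@dataclass
class FakePage:
    """Stand-in for a LangChain Document (page_content + metadata), demo only."""
    page_content: str
    metadata: dict = field(default_factory=dict)


pages = [
    FakePage("Chapter I ...", {"source": "books/Oliver_Twist/chapter_01.txt"}),
    FakePage("Chapter I ...", {"source": "books/Great_Expectations/chapter_01.txt"}),
]
tag_with_book_name(pages, "books")
print([p.metadata["book_name"] for p in pages])
# ['Oliver_Twist', 'Great_Expectations']
```

With this layout, a single loader pointed at the books folder could, in principle, load and tag the whole library in one pass before calling `add_documents`.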

07. Adding the embeddings to the vector store

The tagged documents can now be added to the vector store, which embeds each page and uploads it to the index.

```python
vector_store.add_documents(pages)
```

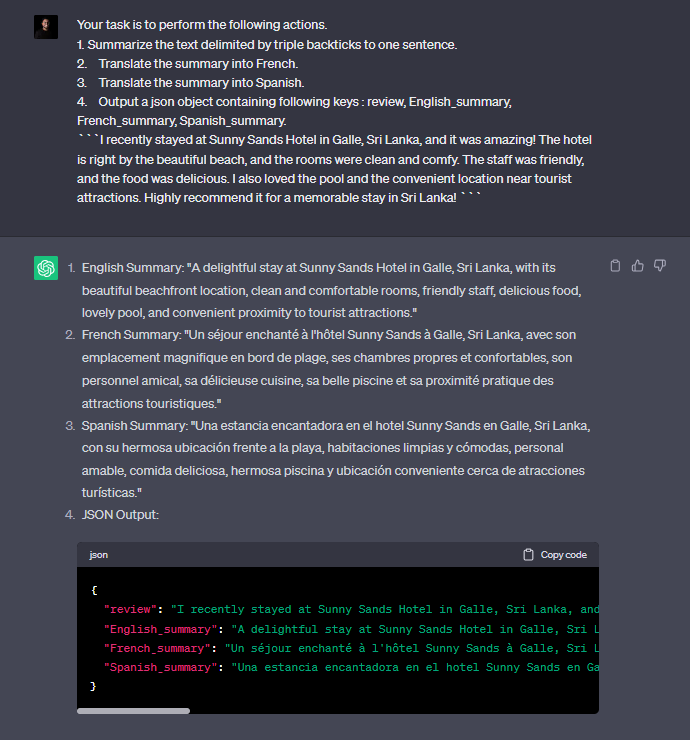
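Why does a plain metadata key end up as a filterable index field? As I understand recent `langchain_community` versions, `AzureSearch` serialises the whole metadata dict into the `metadata` string field. It also copies any metadata key that exactly matches an index field name into that top-level field. The hypothetical function below is a simplified illustration of that mapping, not the library's actual code, so check the behaviour of the version you use. The practical takeaway is that the key in `page.metadata` must be spelled exactly like the index field (`book_name`).

```python
import json


def to_index_document(doc_id, text, vector, metadata, index_field_names):
    """Approximate the record uploaded for one chunk (simplified illustration)."""
    record = {
        "id": doc_id,
        "content": text,
        "content_vector": vector,
        # The full metadata dict is stored as a JSON string.
        "metadata": json.dumps(metadata),
    }
    # Metadata keys that match an index field are also written to that field,
    # which is what makes book_name usable in a $filter expression.
    record.update({k: v for k, v in metadata.items() if k in index_field_names})
    return record


fields = {"id", "content", "content_vector", "metadata", "book_name"}
record = to_index_document(
    "1",
    "Please, sir, I want some more.",
    [0.1, 0.2],
    {"source": "chapter_02.txt", "book_name": "Oliver_Twist"},
    fields,
)
print(record["book_name"])  # Oliver_Twist
print("source" in record)   # False: "source" is not an index field
```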

The Retrieval Process

As described in the use case, the purpose of the custom filterable field is to retrieve only the required documents when answering a user query. For instance, if we only want answers from the book “Oliver Twist”, we should only search the embeddings of that particular book. This can be done with the filter parameter when passing the request to the Azure OpenAI API. Here’s a sample body of the JSON request I sent to the API to get the filtered content. The filter follows the OData $filter syntax.

```json
{
  "data_sources": [
    {
      "type": "azure_search",
      "parameters": {
        "filter": "book_name eq 'Oliver_Twist'",
        "endpoint": "https://<SEARCH_RESOURCE>.search.windows.net",
        "key": "<AZURE_SEARCH_KEY>",
        "index_name": "index_of_books",
        "semantic_configuration": "azureml-default",
        "authentication": {
          "type": "system_assigned_managed_identity",
          "key": null
        },
        "embedding_dependency": null,
        "query_type": "vector_simple_hybrid",
        "in_scope": true,
        "role_information": "You are an AI assistant that finds information in the books in the library.",
        "strictness": 3,
        "top_n_documents": 4,
        "embedding_endpoint": "<EMBEDDING_MODEL>",
        "embedding_key": "<AZURE_OPENAI_API_KEY>"
      }
    }
  ],
  "messages": [
    {
      "role": "system",
      "content": "You are an AI assistant that finds information in the books in the library."
    },
    {
      "role": "user",
      "content": "Please provide me with the summary of the book."
    }
  ],
  "deployment": "gpt-4o",
  "temperature": 0.7,
  "top_p": 0.95,
  "max_tokens": 800,
  "stop": null,
  "stream": false
}
```

Note the "filter" entry inside "parameters", which restricts retrieval to the embeddings tagged with that particular book name. You can also define multiple filterable fields and combine them in more complex applications. Keep in mind that filterable fields add some overhead: they increase the index size, and filtering can make queries slightly slower. They are still convenient in use cases where you need logical separation and filtering of the embeddings within a single vector store.
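Writing filter strings by hand gets error-prone once book names contain apostrophes or several fields are involved. Below is a minimal sketch of hypothetical helpers that build OData `$filter` expressions. Each helper relies on a documented OData rule or Azure AI Search function:

- `odata_literal` doubles single quotes inside string literals.
- `book_filter` uses `eq` for a single book.
- For several books, `book_filter` uses Azure AI Search's `search.in` function.

`publication_year` is a made-up numeric field used only to show how a second condition is combined with `and`.

```python
def odata_literal(value) -> str:
    """Render a Python value as an OData literal; quotes inside strings are doubled."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    return "'" + str(value).replace("'", "''") + "'"


def book_filter(*book_names: str, field: str = "book_name") -> str:
    """Restrict results to one book (eq) or to several books (search.in)."""
    if not book_names:
        raise ValueError("at least one book name is required")
    if len(book_names) == 1:
        return f"{field} eq {odata_literal(book_names[0])}"
    # '|' is used as the search.in delimiter, assuming it never occurs in a book name.
    return f"search.in({field}, {odata_literal('|'.join(book_names))}, '|')"


def combine_filters(*expressions: str) -> str:
    """AND together several filter expressions, each wrapped in parentheses."""
    return " and ".join(f"({e})" for e in expressions)


print(book_filter("Oliver_Twist"))
# book_name eq 'Oliver_Twist'
print(book_filter("Oliver_Twist", "Great_Expectations"))
# search.in(book_name, 'Oliver_Twist|Great_Expectations', '|')
print(combine_filters(book_filter("Oliver_Twist"), f"publication_year ge {odata_literal(1837)}"))
# (book_name eq 'Oliver_Twist') and (publication_year ge 1837)
```

The resulting string is what would go into the "filter" parameter of the request body for each query.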

I'd be happy to hear about interesting use cases where you've applied similar patterns. 🙂